Detection-by-Localization: Maintenance-Free Change Object Detector

Tanaka Kanji

Abstract

Recent research demonstrates that self-localization performance is a very useful measure of likelihood-of-change (LoC) for change detection. In this paper, this “detection-by-localization” scheme is studied in a novel, generalized task of object-level change detection. In our framework, a given query image is segmented into object-level subimages (termed “scene parts”), which are then converted to subimage-level pixel-wise LoC maps via the detection-by-localization scheme. Our approach models a self-localization system as a ranking function that outputs a ranked list of reference images without requiring relevance scores. Thanks to this setting, our approach generalizes to a broad class of self-localization systems. The ranking-based self-localization model also allows us to fuse self-localization results from different modalities via unsupervised rank fusion derived from the field of multi-modal information retrieval (MMR). This study is a first step towards a maintenance-free approach that minimizes the maintenance cost of the map maintenance system (e.g., background model, detector engine).
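To illustrate what score-free rank fusion across modalities can look like, here is a minimal sketch. The abstract does not say which fusion rule the paper uses, so this example swaps in reciprocal rank fusion (RRF), a common unsupervised method from information retrieval. The function name, the constant `k = 60`, and the reference-image IDs are all assumptions made for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse several best-first ranked lists of reference-image IDs.

    Only rank positions are used, never relevance scores.
    `k` is the usual RRF damping constant (an assumed value here).
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, image_id in enumerate(ranking, start=1):
            scores[image_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by ID for determinism.
    return sorted(scores, key=lambda image_id: (-scores[image_id], image_id))


# Two hypothetical modalities ranking the same four reference images.
appearance_ranking = ["ref_03", "ref_01", "ref_02", "ref_04"]
semantic_ranking = ["ref_01", "ref_02", "ref_03", "ref_04"]

fused = reciprocal_rank_fusion([appearance_ranking, semantic_ranking])
print(fused)  # ['ref_01', 'ref_03', 'ref_02', 'ref_04']
```

Because the fusion consumes only rank positions, any self-localization system that returns an ordered list of reference images could in principle be plugged in without calibrating its scores.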

BibTeX

@inproceedings{DBLP:conf/icra/Kanji19,
  author    = {Tanaka Kanji},
  title     = {Detection-by-Localization: Maintenance-Free Change Object Detector},
  booktitle = {International Conference on Robotics and Automation, {ICRA} 2019, Montreal, QC, Canada, May 20-24, 2019},
  pages     = {4348--4355},
  publisher = {{IEEE}},
  year      = {2019},
  url       = {https://doi.org/10.1109/ICRA.2019.8793482},
  doi       = {10.1109/ICRA.2019.8793482},
  timestamp = {Thu, 28 Sep 2023 20:45:52 +0200},
  biburl    = {https://dblp.org/rec/conf/icra/Kanji19.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}

Figures and photos

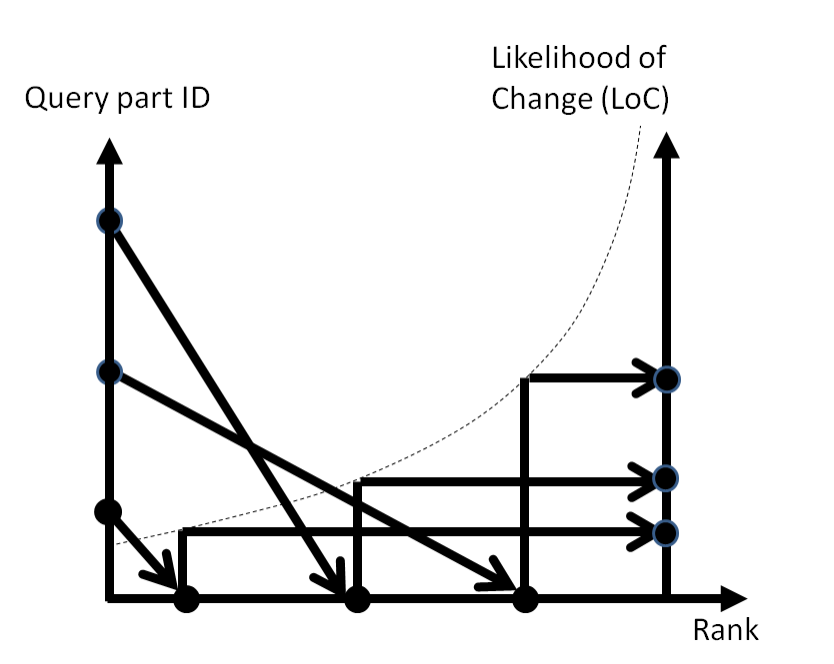

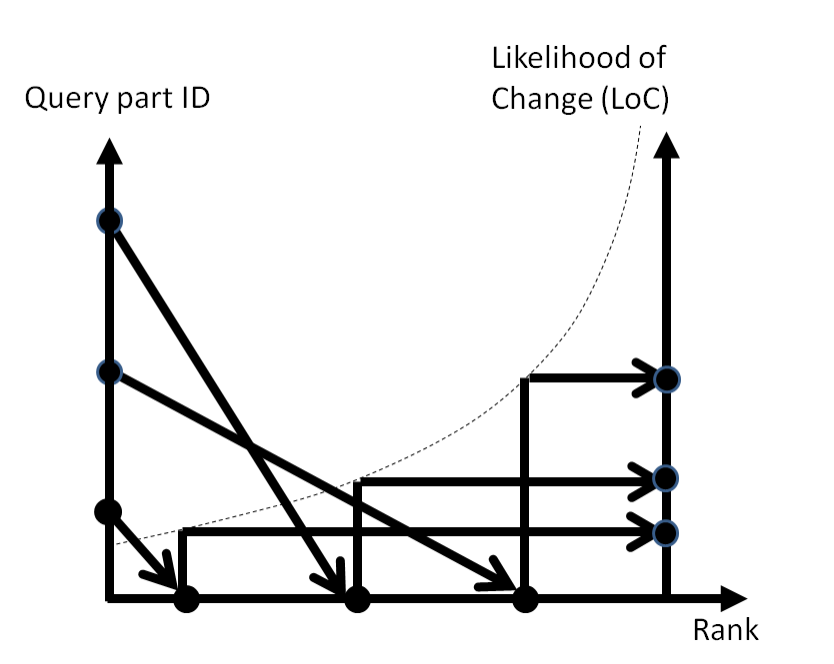

Fig. 1. In the detection-by-localization scheme, self-localization performance (i.e., rank value of the “ground-truth” reference image in the map database) is used as a measure of likelihood-of-change (LoC) for change detection. The new generalized problem of object-level change detection, addressed in this paper, takes object-level subimages (instead of a full image) as query input for the self-localization, and outputs a subimage-level pixel-wise LoC map.
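The idea in Fig. 1 of using the rank of the ground-truth reference image as an LoC measure can be sketched as follows. This is an illustrative sketch, not the paper's implementation: the function names, the handling of a missing ground-truth image, and the normalization of the rank to the range [0, 1] are all assumptions.

```python
def rank_of_ground_truth(ranked_reference_ids, ground_truth_id):
    """Return the 1-based rank of the ground-truth reference image.

    If the ground-truth image is missing from the list, return the worst
    possible rank plus one (an assumption made for this sketch).
    """
    try:
        return ranked_reference_ids.index(ground_truth_id) + 1
    except ValueError:
        return len(ranked_reference_ids) + 1


def likelihood_of_change(ranked_reference_ids, ground_truth_id):
    """Map the rank to [0, 1]: 0 if ranked first, 1 if ranked last or missing."""
    n = len(ranked_reference_ids)
    if n <= 1:
        return 0.0
    rank = min(rank_of_ground_truth(ranked_reference_ids, ground_truth_id), n)
    return (rank - 1) / (n - 1)


# Hypothetical output of a self-localization system for one query subimage.
ranking = ["ref_07", "ref_02", "ref_05", "ref_01", "ref_09"]
print(rank_of_ground_truth(ranking, "ref_05"))  # 3
print(likelihood_of_change(ranking, "ref_05"))  # 0.5
```

A poor rank means the query no longer matches the place it was taken from, which is what this scheme reads as evidence of change.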

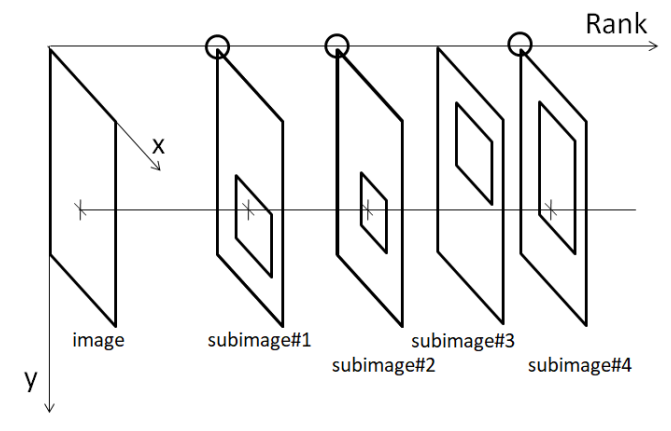

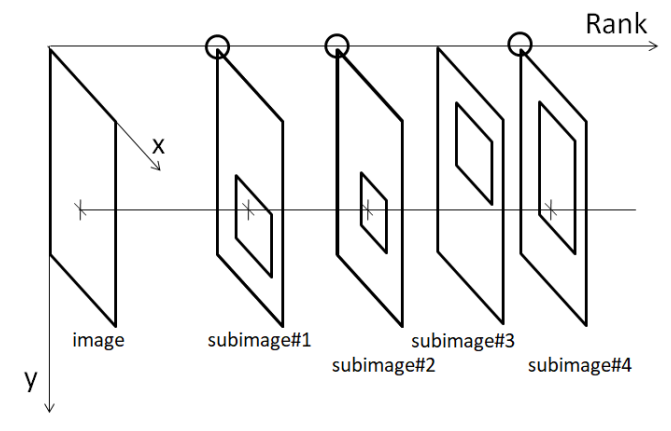

Fig. 2. Estimating the LoC map. We consider a new setting in which each pixel may belong to multiple subimages (referred to as qBBs). Each subimage is input to a self-localization system to obtain a rank value, and the rank value of each pixel is then computed from the subimages to which the pixel belongs. In the figure, a rectangle indicates the bounding box of an image or a subimage, and a ’+’ mark indicates the pixel of interest.
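The per-pixel step described in Fig. 2 can be sketched as below. The caption does not state how the rank values of overlapping subimages are combined, so this sketch assumes a simple mean and assigns 0.0 to pixels that no subimage covers. The function name and the box format `(x0, y0, x1, y1, rank)` with half-open pixel ranges are also assumptions.

```python
def loc_map_from_boxes(height, width, boxes):
    """Build a pixel-wise map by averaging rank values of covering boxes.

    `boxes` is a list of (x0, y0, x1, y1, rank) tuples, where the box
    covers columns x0..x1-1 and rows y0..y1-1 (half-open ranges).
    """
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1, rank in boxes:
        # Clip each box to the image before accumulating.
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                total[y][x] += rank
                count[y][x] += 1
    # Mean rank per pixel; uncovered pixels get 0.0 (assumed default).
    return [
        [total[y][x] / count[y][x] if count[y][x] else 0.0 for x in range(width)]
        for y in range(height)
    ]


# Two hypothetical overlapping subimages in a 4x4 image, with ranks 1 and 9.
loc_map = loc_map_from_boxes(4, 4, [(0, 0, 2, 2, 1), (1, 1, 4, 4, 9)])
print(loc_map[1][1])  # 5.0, the pixel lies in both boxes
print(loc_map[0][0])  # 1.0, the pixel lies only in the first box
```

Other aggregation rules, such as taking the minimum or maximum rank over the covering subimages, would fit the same structure by changing only the accumulation step.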

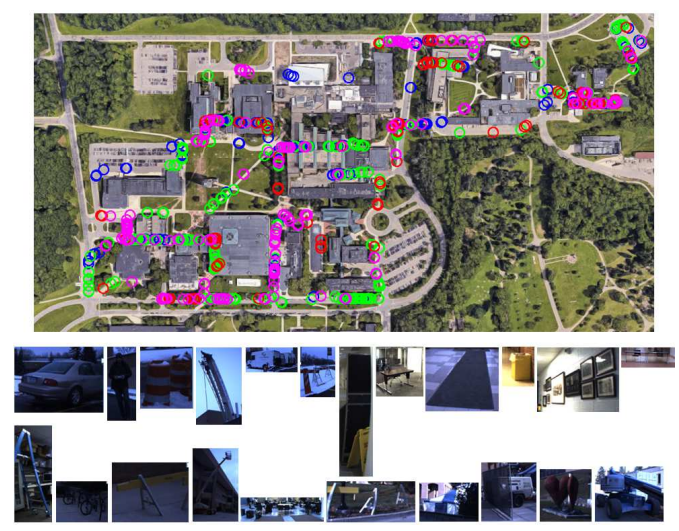

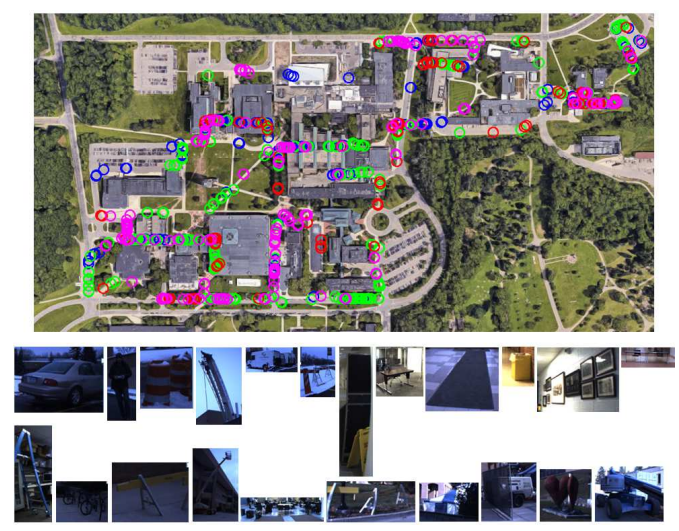

Fig. 3. Experimental settings. Top: the workspace and the robot’s viewpoints of the test images. Bottom: examples of change objects.

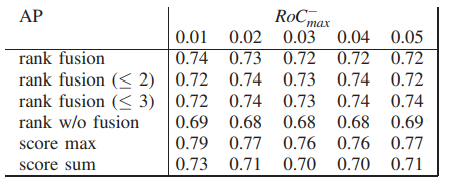

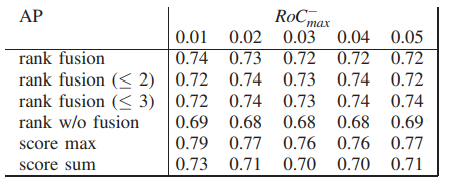

Table 1: Performance results